Elastic HPC Jobs - OFS

Example HPC jobs with CCQ and OrangeFS

Setup to Launch a Sample Job

To set up the sample job, first SSH into the login instance and copy the sample jobs to the shared file system.

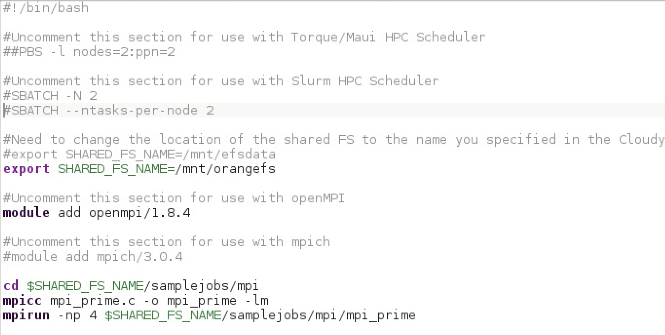

Edit the sample job you would like to run. In its job script, remove the extra comment character (#) from the directive for the scheduler you are running; this example uses the Slurm scheduler. Then uncomment the sharedFS option you selected, which in this example is OrangeFS.

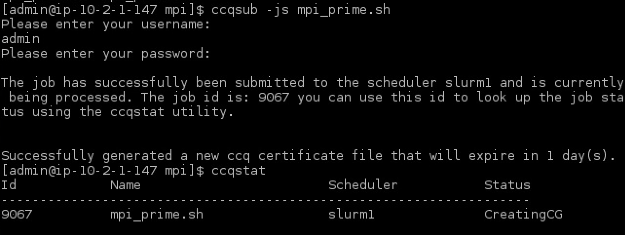

Launch the job by running the `ccqsub` command, then check its status with `ccqstat`:

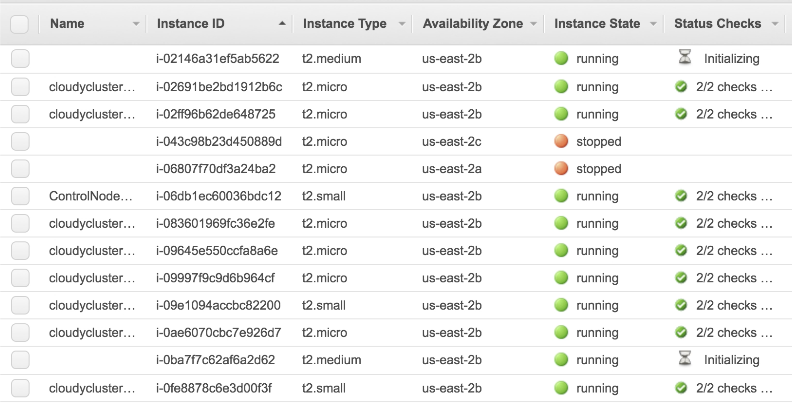

The two t2.medium nodes for the job are launched automatically.

You can also edit the job script to use other node configurations, save it, and launch those jobs as well.

You can also run jobs on Spot Instances by adding the following directives to the job script:

- `#CC -us yes` (use Spot Instances)

- `#CC -sp .15` (the bid price)

- `#CC -it c4.2xlarge` (the instance type)
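If you generate many job scripts, the spot directives above can also be added programmatically. The sketch below is a minimal, hypothetical helper (`spot_directives` and `add_spot_directives` are not part of CCQ); it only emits the three `#CC` flags shown in this guide. Placing them directly after the shebang line is an assumption modeled on how Slurm reads `#SBATCH` lines, so compare the result against your CCQ sample jobs.

```python
def spot_directives(instance_type: str, bid_price: str) -> list[str]:
    """Return the #CC directive lines that request Spot Instances.

    bid_price is kept as a string so it is written exactly as given
    (for example ".15"); it is parsed only to check that it is positive.
    """
    if not float(bid_price) > 0:  # also rejects NaN
        raise ValueError("bid price must be a positive number")
    return [
        "#CC -us yes",               # use Spot Instances
        f"#CC -sp {bid_price}",      # bid price
        f"#CC -it {instance_type}",  # instance type
    ]


def add_spot_directives(script: str, instance_type: str, bid_price: str) -> str:
    """Insert the spot directives right after the shebang line, if present.

    Assumption: directives belong at the top of the script, as with #SBATCH.
    """
    lines = script.splitlines()
    insert_at = 1 if lines and lines[0].startswith("#!") else 0
    directives = spot_directives(instance_type, bid_price)
    new_lines = lines[:insert_at] + directives + lines[insert_at:]
    return "\n".join(new_lines) + "\n"


if __name__ == "__main__":
    # Hypothetical two-line job script used only for illustration.
    sample = "#!/bin/bash\nsrun hostname\n"
    print(add_spot_directives(sample, "c4.2xlarge", ".15"), end="")
```

Run against the illustrative script, this prints the shebang, then `#CC -us yes`, `#CC -sp .15`, `#CC -it c4.2xlarge`, and finally `srun hostname`. Keeping the price as a string avoids reformatting `.15` as `0.15`, in case the exact text matters to the parser.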

Launch the job and check the status.

A job can be in one of the following states:

- Pending: the job is waiting to be processed by CCQ.

- CreatingCG: CCQ is creating the Compute Group instances.

- ExpandingCG: the job is waiting for the current Compute Group to expand and create new Compute Instances.

- Provisioning: CCQ is performing final node setup.

- CCQueued: the job is being submitted to the scheduler.

- Running: the job has been handed off to the scheduler, which is Slurm in this example.

- Completed: the job finished running successfully.

- Error: the job encountered an error during execution. The error is logged in the Administration -> Errors tab of the CloudyCluster UI.
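When scripting around `ccqstat`, it helps to treat the states listed above as a fixed set. The sketch below models them as a Python enum and treats Completed and Error as the only terminal states, which is an assumption based on the descriptions above. `JobState`, `parse_state`, and `is_finished` are hypothetical names, and extracting the status string from `ccqstat` output is left out because its output format is not shown here.

```python
from enum import Enum


class JobState(Enum):
    """Job states as named in this guide."""
    PENDING = "Pending"
    CREATING_CG = "CreatingCG"
    EXPANDING_CG = "ExpandingCG"
    PROVISIONING = "Provisioning"
    CC_QUEUED = "CCQueued"
    RUNNING = "Running"
    COMPLETED = "Completed"
    ERROR = "Error"


# Assumption: once a job reaches one of these, its state no longer changes.
TERMINAL_STATES = {JobState.COMPLETED, JobState.ERROR}


def parse_state(text: str) -> JobState:
    """Map a status string such as 'CCQueued' to a JobState (ValueError if unknown)."""
    return JobState(text.strip())


def is_finished(text: str) -> bool:
    """True if the status string names a terminal state."""
    return parse_state(text) in TERMINAL_STATES
```

A polling script could call `is_finished` on each status it reads and stop once it returns `True`, then check for `Error` to decide whether to look in the Administration -> Errors tab.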

When the job finishes, its status changes to Completed.

The job's status output is written to your home directory or to the directory from which the job was launched.

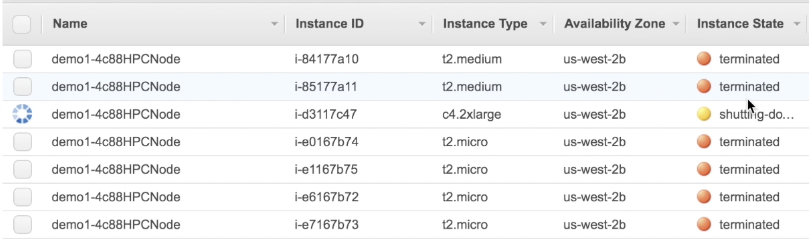

When no more jobs remain for an instance type, the instances of that type are terminated automatically.
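The termination rule above can be illustrated with a simplified model: an instance type is idle once no job that still needs it is in a non-terminal state. This is a sketch of the idea only, not CCQ's implementation; any timeouts or Compute Group bookkeeping CCQ applies are not described here. `Job`, `ACTIVE_STATES`, and `idle_instance_types` are hypothetical names.

```python
from dataclasses import dataclass

# Every state from the list above except the terminal Completed and Error.
ACTIVE_STATES = {"Pending", "CreatingCG", "ExpandingCG",
                 "Provisioning", "CCQueued", "Running"}


@dataclass
class Job:
    name: str
    instance_type: str
    state: str


def idle_instance_types(launched: set[str], jobs: list[Job]) -> set[str]:
    """Return launched instance types that no active job still needs."""
    still_needed = {job.instance_type for job in jobs if job.state in ACTIVE_STATES}
    return launched - still_needed


if __name__ == "__main__":
    jobs = [
        Job("sample-a", "t2.medium", "Completed"),
        Job("spot-b", "c4.2xlarge", "Running"),
    ]
    print(idle_instance_types({"t2.medium", "c4.2xlarge"}, jobs))
```

With these illustrative jobs, only `t2.medium` is reported as idle, because the `c4.2xlarge` job is still running.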

Now you can pause your HPC Environment if you do not have any more jobs to run. Once everything is paused, you can also stop the control node from the AWS Console and restart it when you are ready to run jobs again.

To assist researchers, a wide variety of common open-source HPC tools and libraries come pre-installed and configured, ready to run and ready to scale.